
Think of your AI strategy like building a skyscraper. You wouldn’t construct twenty floors and then try to figure out where the foundation should go. Security must be part of the blueprint from the very beginning. Bolting on security measures after an AI model is already in use is a recipe for disaster, leaving you vulnerable to data poisoning, model theft, and compliance failures. A solid governance framework provides the structure needed to innovate responsibly, setting clear rules for everyone. This article provides that blueprint, outlining the practical steps for securing generative AI in enterprises by embedding security into every stage of the development and deployment lifecycle.

Adopting generative AI isn’t just about plugging in a new tool; it’s about understanding and preparing for a new class of security challenges. While the potential for innovation is huge, these AI systems introduce unique vulnerabilities that can expose your organization if left unchecked. Before you can build a secure AI framework, you need a clear picture of what you’re up against. From sensitive data leaks to sophisticated social engineering, the risks are varied and complex. Getting familiar with these threats is the first and most critical step in creating a strategy that protects your data, your models, and your reputation. Let’s walk through the top security risks you need to have on your radar.

One of the most immediate concerns with enterprise AI is protecting sensitive information. When employees use generative AI tools, they might inadvertently enter confidential data, such as customer PII, internal financial reports, or proprietary code, into the model’s prompts. Depending on the provider’s terms and settings, that data may be retained or used to train the model, potentially exposing it to other users or to the AI provider. Securing these systems is crucial for maintaining data privacy and preventing leaks. Without strict controls and employee training, your most valuable information could easily walk out the digital door, creating significant compliance and security incidents.

Your AI models are valuable intellectual property. Attackers can target them directly, aiming to steal the model’s architecture and training data. They might also attempt to tamper with the model through data poisoning, where they intentionally feed it malicious data during the training phase. This can corrupt the model, causing it to produce inaccurate or harmful outputs. For instance, a tampered model used for code generation could start suggesting insecure code snippets, introducing vulnerabilities directly into your applications. Effectively managing gen AI risks means protecting the integrity of the models themselves, not just the data they process.

Generative AI gives threat actors powerful tools to create highly convincing and personalized attacks at an unprecedented scale. They can use AI to generate deepfake videos or audio that impersonate executives, or to craft polished phishing emails tailored to specific individuals, making them much harder to detect. The ability to automate social engineering attacks means your employees will face more sophisticated and frequent threats. Traditional security awareness training may not be enough to defend against AI-powered phishing campaigns that closely mimic trusted communication styles and contexts, making robust technical controls more important than ever.

The output of a generative AI model depends heavily on its input. Attackers can exploit this through techniques like prompt injection, where they craft specific inputs to trick the model into bypassing its safety controls or generating malicious content. This could include anything from hate speech and misinformation to code that executes a cyberattack. Furthermore, if a model is trained on biased data, it can reproduce that bias in its output, leading to skewed business insights, discriminatory decisions, and reputational damage. Ensuring the reliability and fairness of AI outputs is a critical security challenge.

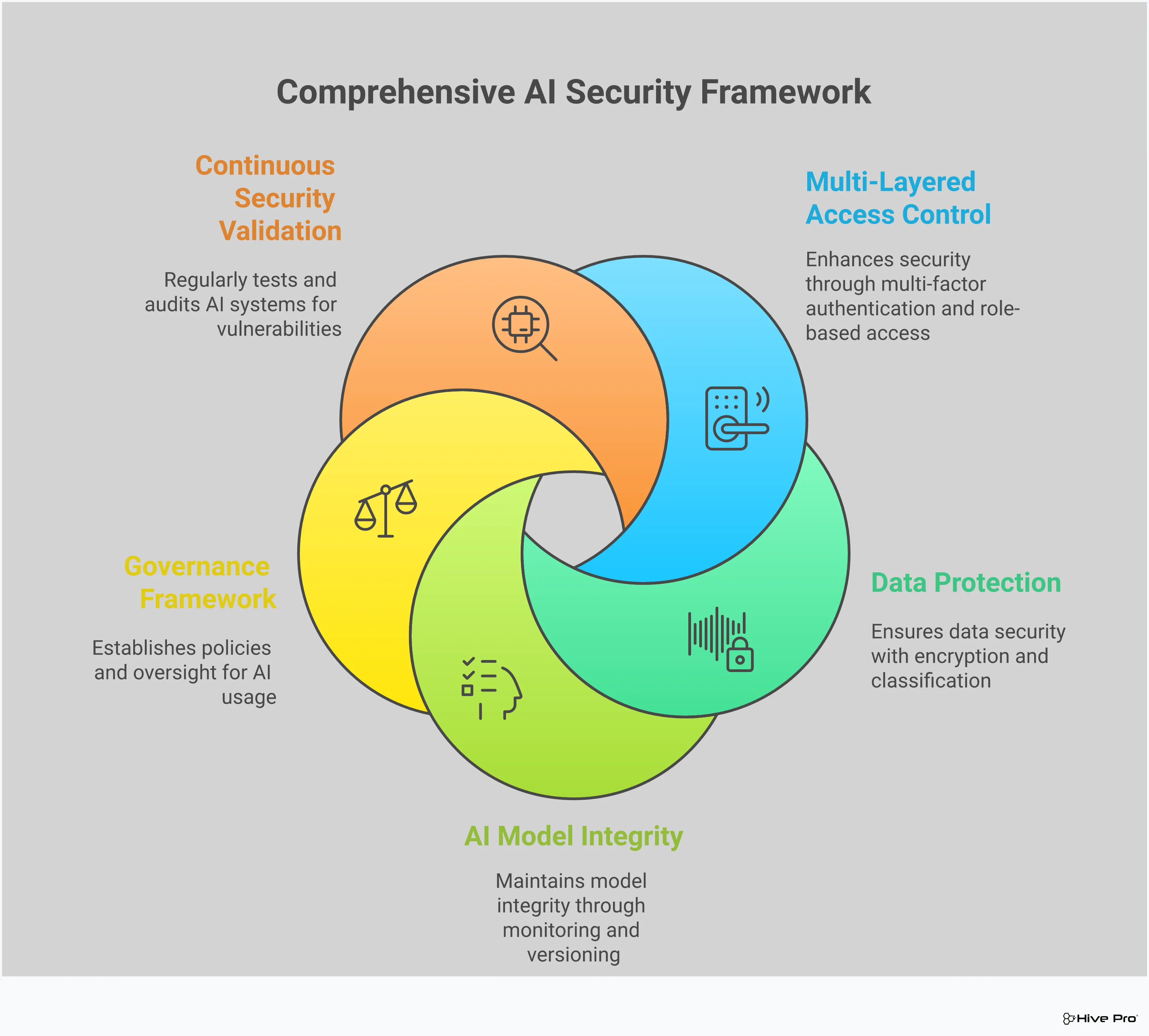

Before you can build a comprehensive AI governance framework, you need a solid foundation. Implementing essential security controls is the first practical step toward securing your generative AI initiatives. These controls act as your first line of defense, protecting your data, models, and infrastructure from common threats. Think of them as the locks on your doors and windows—fundamental security measures that must be in place to deter intruders. By focusing on access, encryption, model integrity, and monitoring, you can create a resilient security posture that addresses the most immediate risks associated with enterprise AI adoption. This proactive approach helps you move from a reactive stance to one of confident, strategic action.

Controlling who can access your AI tools and the data they use is non-negotiable. Start by enforcing strong authentication measures across the board. Multi-factor authentication (MFA) should be the standard for any user accessing AI systems, especially those handling sensitive information. Beyond that, operate on the principle of least privilege: grant employees access only to the data and AI functionalities they absolutely need to perform their jobs. Regularly auditing these permissions ensures that access levels remain appropriate as roles change, preventing unnecessary exposure and reducing the risk of insider threats, whether accidental or malicious.

Data is the fuel for generative AI, and it needs to be protected at all times. Implementing robust encryption is one of the most effective ways to safeguard your information. This means encrypting data both at rest (when it’s stored in databases or file systems) and in transit, as it moves between your systems and the AI models. Encrypting your training datasets, user prompts, and the AI-generated outputs means that even if a system is breached, the underlying data stays unreadable to an attacker who does not also hold the keys, so keys should be stored and managed separately from the data they protect. This control is a critical component of any data protection strategy and helps you protect trade secrets and customer information.

Your AI models are valuable intellectual property and can be targets themselves. One of the most significant threats is data poisoning, where an attacker intentionally feeds the model malicious or skewed data during the training phase. This can corrupt the model, introduce biases, or cause it to generate harmful outputs. To counter this, you must secure the integrity of your training data. This involves carefully vetting your data sources, cleaning and validating datasets before use, and monitoring the training process for any unusual patterns. Protecting your data pipeline is essential for building AI systems that are not only powerful but also trustworthy and reliable.
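One way to make “validating datasets before use” concrete is to record a cryptographic digest of every file once a dataset has been vetted, then refuse to train if anything has changed since. The sketch below assumes the dataset lives in a single directory; the function names `build_manifest` and `verify_manifest` are hypothetical. Note that it only detects changes made after vetting, so it complements careful source vetting rather than replacing it.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(dataset_dir: Path) -> dict[str, str]:
    """Record a digest for every file under the vetted dataset directory."""
    return {
        str(p.relative_to(dataset_dir)): sha256_of(p)
        for p in sorted(dataset_dir.rglob("*"))
        if p.is_file()
    }


def verify_manifest(dataset_dir: Path, manifest: dict[str, str]) -> list[str]:
    """Return the files that were changed, added, or removed since vetting."""
    current = build_manifest(dataset_dir)
    # A removed file has no current digest, so it also counts as changed.
    changed = {name for name in manifest if current.get(name) != manifest[name]}
    added = set(current) - set(manifest)
    return sorted(changed | added)
```

A training job could call `verify_manifest` first and stop if the returned list is not empty, leaving the differences for a human to review.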

You can’t protect what you can’t see. Establishing real-time monitoring and logging for your AI systems is crucial for detecting and responding to threats as they happen. Continuous monitoring gives you visibility into how your AI tools are being used, who is accessing them, and what data is being processed. This allows your security team to spot anomalous behavior, such as an unusual volume of queries or attempts to access restricted data, which could indicate a breach or misuse. A platform that provides a unified view of cyber risks can help you keep a close watch on your AI environment, enabling you to identify potential incidents early and prevent minor issues from becoming major crises.
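As a rough illustration of spotting “an unusual volume of queries,” the sketch below counts each user’s queries in a sliding time window and flags anyone who exceeds a limit. The class name `QueryRateMonitor`, the threshold, and the window size are all assumptions for illustration; a real deployment would feed this from its own logs and tune the limits to normal usage.

```python
from collections import defaultdict, deque


class QueryRateMonitor:
    """Flag users whose query count in a sliding time window exceeds a limit."""

    def __init__(self, max_queries: int, window_seconds: float) -> None:
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        self._events: dict[str, deque[float]] = defaultdict(deque)

    def record(self, user: str, timestamp: float) -> bool:
        """Record one query; return True if the user is now over the limit."""
        events = self._events[user]
        events.append(timestamp)
        # Drop events that have fallen out of the window.
        while events and events[0] <= timestamp - self.window_seconds:
            events.popleft()
        return len(events) > self.max_queries
```

For example, `QueryRateMonitor(max_queries=100, window_seconds=60)` would flag a user on their 101st query within a minute, which a security team could route to an alert rather than an automatic block.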

Think of an AI governance framework as the essential rulebook for how your organization uses artificial intelligence. Without one, you’re essentially letting employees experiment with powerful tools without any guardrails, which can open the door to significant security and compliance risks. A solid framework doesn’t stifle innovation; it provides the structure needed to innovate responsibly. It sets clear expectations for everyone, from developers to end-users, ensuring that your use of generative AI aligns with your security posture, business goals, and legal obligations.

Building this framework involves creating clear policies, assessing risks, defining compliance needs, and setting technical standards. This proactive approach helps you manage the entire lifecycle of AI systems securely. By establishing governance from the start, you create a foundation of trust and control, making it easier to adopt new AI technologies confidently. It’s about moving from a reactive stance on security to a proactive one, where potential issues are identified and addressed before they become critical incidents. A well-defined governance structure is a key part of a mature cybersecurity strategy.

Your first step is to create and communicate clear, easy-to-understand policies for AI use. These guidelines should explicitly state what is and isn’t acceptable. For instance, your policy should detail which types of company data can be entered into generative AI tools and which are strictly off-limits, like customer PII or proprietary code. It’s also wise to maintain a list of approved and vetted AI applications that employees are permitted to use.

Of course, a policy is only effective if it’s enforced. You need to establish control mechanisms to monitor the use of AI systems and ensure compliance. This isn’t about micromanaging your teams; it’s about protecting sensitive information. Clearly outline the consequences for policy violations and apply them consistently to reinforce the importance of secure AI practices across the organization.

You can’t protect against risks you don’t know exist. That’s why establishing regular risk assessment protocols for all AI tools is critical. This process involves identifying potential security threats—like data leakage, model poisoning, or prompt injection attacks—and evaluating their potential impact on your organization. Your security team should conduct regular audits of AI implementations to find and fix vulnerabilities before they can be exploited.

Strengthening your preventive measures is a key part of this process. This includes enforcing multi-factor authentication (MFA) for AI tools that handle sensitive data and deploying AI-specific data loss prevention (DLP) solutions. By continuously evaluating your AI systems, you can better understand your exposure and focus your efforts on the most significant threats. This aligns with a modern approach to vulnerability and threat prioritization, helping you manage your attack surface effectively.

Generative AI doesn’t operate in a legal vacuum. Your governance framework must be built on a solid understanding of your compliance obligations. Lax data security can lead to the public exposure of trade secrets, intellectual property, and sensitive customer data, resulting in severe financial and reputational damage. Your legal and compliance teams should be involved in defining how regulations like GDPR, CCPA, and HIPAA apply to your AI usage.

Start by mapping out how data flows into and out of your AI models. Identify where personal or regulated data is being used and ensure you have the proper consent and security measures in place. Documenting these data flows and compliance controls is essential for demonstrating due diligence and preparing for potential audits. This ensures your AI initiatives support your business without introducing unnecessary legal risk.

Finally, your framework needs to include concrete security standards for protecting the data that fuels your AI models. These are the technical ground rules that your teams must follow. Three of the most critical standards to implement are data classification, anonymization, and encryption. Data classification involves labeling your data based on its sensitivity, which helps determine the level of protection it requires.

For highly sensitive information, use anonymization or pseudonymization techniques to remove or replace personally identifiable information before it’s used to train or query a model. And most importantly, enforce encryption for all data, both when it’s stored (at rest) and when it’s being transmitted (in transit). These measures are fundamental preventive controls that drastically reduce the risk of a data breach and should be considered non-negotiable for any generative AI system.

Your generative AI models are only as good—and as secure—as the data they’re trained on. Protecting this data throughout its lifecycle is fundamental to preventing leaks, maintaining privacy, and ensuring your AI tools are a business asset, not a liability. A proactive approach to data protection involves more than just putting up a firewall; it requires a multi-layered strategy that addresses how data is classified, handled, stored, and used. By implementing robust data protection measures, you create a secure foundation for your AI initiatives, building trust with both customers and internal teams. Let’s walk through four practical strategies that will help you safeguard your most valuable digital assets from exposure and misuse.

Before you can protect your data, you need to know what you have. Start by classifying your information based on its sensitivity level—public, internal, confidential, or restricted. This process helps you determine the appropriate security controls for each data type. For instance, customer PII or proprietary trade secrets require much stricter handling than public marketing materials. Data classification, combined with anonymization and encryption, serves as a critical first line of defense. It ensures you apply the right level of protection where it’s needed most, preventing sensitive information from accidentally being fed into an AI model.
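The four sensitivity levels above can be expressed as an ordered type, which makes “apply the right level of protection” something code can check. This is a minimal sketch: the destination names and the ceilings in `MAX_ALLOWED` are hypothetical and would come from your own policy.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical policy: the highest label each destination may receive.
MAX_ALLOWED = {
    "public_chatbot": Sensitivity.PUBLIC,
    "approved_internal_model": Sensitivity.CONFIDENTIAL,
}


def may_send(label: Sensitivity, destination: str) -> bool:
    """Allow data only if its label is at or below the destination's ceiling.

    Unknown destinations are denied by default.
    """
    ceiling = MAX_ALLOWED.get(destination)
    return ceiling is not None and label <= ceiling
```

Denying unknown destinations by default means a newly adopted tool receives nothing until someone explicitly adds it to the policy.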

Once you know what data you have, you need to make sure it’s safe to use for training AI. This is where data sanitization comes in. Implement a thorough process to identify and remove any sensitive or personally identifiable information from your datasets before they are used to train a model. This isn’t just about deleting a few columns; it’s about using techniques like data masking or tokenization to protect privacy while preserving the data’s utility. This step is crucial for minimizing the risk of data leakage and ensuring you comply with privacy regulations.
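To show the difference between masking and tokenization, here is a small sketch that handles one kind of identifier, email addresses. The regular expression is deliberately simple, and the helper names and the `tok_` prefix are assumptions for illustration. Keyed tokenization like this is a form of pseudonymization: the same input always maps to the same token, so records stay joinable, but anyone holding the key can re-derive tokens, so the key needs the same protection as the data.

```python
import hashlib
import hmac
import re

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def mask_email(value: str) -> str:
    """Masking: keep the first character and the domain, hide the rest."""
    local, _, domain = value.partition("@")
    return f"{local[:1]}***@{domain}"


def tokenize(value: str, key: bytes) -> str:
    """Tokenization: replace a value with a keyed, deterministic token."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    # Truncated for readability; keep the full digest if collisions matter.
    return f"tok_{digest[:16]}"


def sanitize_text(text: str, key: bytes) -> str:
    """Replace every email address in free text with its token."""
    return EMAIL_RE.sub(lambda m: tokenize(m.group(0), key), text)
```

Masking suits displays and logs where a human needs a hint of the original value; tokenization suits training data where the value itself is irrelevant but consistency across records matters.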

Your curated training datasets are a high-value target for attackers. You need to protect them at all costs. Encrypt your datasets both at rest and in transit, and secure all connections to them. This is a non-negotiable baseline. Beyond encryption, you should enforce robust security policies and access controls around your most sensitive data. A platform that provides a unified view of cyber risks can help you identify and prioritize threats to your data repositories, allowing you to focus your defensive efforts on the most critical vulnerabilities and protect the core of your AI systems.

Data protection doesn’t stop once the model is trained. You also need to manage the data that goes into the AI and what comes out of it. Your AI use and security policies should be crystal clear about what information employees can and cannot include in queries to chatbots or other generative AI tools. For example, explicitly forbid entering customer data, internal financial figures, or any other confidential information. Setting these guardrails prevents accidental data exposure through user interactions and ensures the AI doesn’t generate outputs that reveal sensitive details it learned during training.
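A written policy about what may go into a prompt can be backed by a lightweight check that runs before a query leaves your environment. The sketch below is only a pattern-matching illustration: the patterns in `BLOCKED_PATTERNS` are hypothetical examples, and regular expressions alone will miss plenty of sensitive content, so treat it as one layer alongside training and dedicated DLP tooling.

```python
import re

# Hypothetical patterns; a real deployment would tune these to its own data.
BLOCKED_PATTERNS = {
    "email address": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "confidential marker": re.compile(
        r"\b(confidential|internal only)\b", re.IGNORECASE
    ),
}


def screen_prompt(prompt: str) -> list[str]:
    """Return the names of blocked patterns found in the prompt."""
    return [
        name
        for name, pattern in BLOCKED_PATTERNS.items()
        if pattern.search(prompt)
    ]
```

An empty result means the prompt can go through; a non-empty one can be shown to the user so they understand which rule was triggered and can rephrase.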

Building secure AI is a lot like building a secure house—you can’t just add locks to the doors after it’s finished and hope for the best. Security needs to be part of the blueprint from day one. Integrating security practices throughout the entire AI development lifecycle is the only way to create models that are resilient, trustworthy, and safe for your organization to use. This means thinking about potential threats before you write a single line of code and continuing to test and monitor long after the model is deployed. By embedding security into every stage, you move from a reactive stance to a proactive one, catching issues early and building a stronger defense against emerging AI threats.

The security of your AI model begins with the data it learns from. During the development phase, your top priority is to protect this foundational data. Start by classifying information to identify what’s sensitive and requires the highest level of protection. From there, you can apply techniques like anonymization to strip out personally identifiable information and encryption to safeguard the data both at rest and in transit. These preventive measures are critical for securing your generative AI systems from the inside out. Treating your training data with this level of care ensures you aren’t accidentally introducing massive vulnerabilities before your model even goes live.

When you’re ready to move your AI model from a development environment to a live one, you need to secure the entire ecosystem around it. This involves more than just the model itself. You must encrypt the final data sets and all connections that feed into and out of the AI system. This protects your organization’s most sensitive information from being intercepted. You should also implement robust security policies and access controls to govern how the model is used. A key step is to validate your security controls to ensure they work as expected in a real-world setting, closing any gaps before they can be exploited.

AI systems are not static; they change as they are retrained, fine-tuned, or connected to new data sources, and the threat landscape around them changes just as quickly. That’s why one-time security checks aren’t enough. You need to implement a continuous testing strategy to maintain your AI’s integrity over time. This includes conducting regular security audits of your AI tools and their implementations. By continuously scanning your full attack surface, you can identify new vulnerabilities as they appear. This ongoing process helps you prioritize threats effectively and strengthen your preventive measures, ensuring your AI remains a secure asset rather than becoming a liability.

Even with the best defenses, you have to be prepared for a security incident. Without proper governance and supervision, your use of generative AI can create significant legal and reputational risks. That’s why having a well-defined incident response plan is non-negotiable. This plan should be tailored specifically to AI-related incidents, such as data poisoning, model theft, or the generation of malicious output. Outline clear steps for containment, investigation, and remediation. Knowing exactly who is responsible for what and how to communicate during a crisis will help you manage the situation effectively and minimize potential damage.

Even the most advanced security tools can’t protect you if your team isn’t on board. Building a security-first culture around AI is about making security a shared responsibility, not just a task for the IT department. It means embedding security awareness into every decision your teams make when they use, develop, or purchase AI tools. When people are your first line of defense, you need to equip them with the right knowledge and clear guidelines to make smart choices.

This cultural shift starts from the top down. Leadership must champion the importance of secure AI practices and provide the resources for training and enforcement. To keep sensitive work data secure when teams use generative AI, you need to establish strict guidelines and control mechanisms. This involves creating a framework where employees understand the risks, know their responsibilities, and feel empowered to raise concerns. A strong security culture transforms your team from a potential liability into your greatest security asset, helping you proactively manage your total attack surface before threats emerge.

Your employees are interacting with AI every day, whether they realize it or not. That’s why continuous training is non-negotiable. Your training program should go beyond a one-time webinar and cover the practical risks of using generative AI. Teach your teams how to spot sophisticated, AI-powered phishing emails and what types of company data should never be entered into public AI models. Create clear, easy-to-understand policies on acceptable AI use and make sure everyone knows where to find them. As AI technology changes, so should your training, keeping your team prepared for new threats.

Not everyone in your organization needs access to the same AI tools or the sensitive data they process. Implementing role-based access control (RBAC) is fundamental to limiting your exposure. By following the principle of least privilege, you grant employees access only to the information and AI functionalities essential for their specific roles. This approach minimizes the potential damage if a user’s account is compromised. You can strengthen these preventive measures with multi-factor authentication and regular security audits of AI tool implementations to ensure that access levels remain appropriate and secure over time.
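In code, role-based access control with least privilege comes down to a mapping from roles to permissions and a check that defaults to “no.” The roles and permission strings below are invented for illustration; the second helper sketches the kind of periodic audit mentioned above by listing permissions a user holds but has not used.

```python
ROLE_PERMISSIONS = {
    "analyst": {"chat:use"},
    "ml_engineer": {"chat:use", "model:deploy", "dataset:read"},
    "security_admin": {"chat:use", "audit_log:read"},
}


def is_allowed(roles: set[str], permission: str) -> bool:
    """Grant access only if one of the user's roles carries the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


def excess_permissions(roles: set[str], used: set[str]) -> set[str]:
    """Audit helper: permissions granted by the roles but never exercised."""
    granted = set().union(*(ROLE_PERMISSIONS.get(r, set()) for r in roles))
    return granted - used
```

Unknown roles map to an empty permission set, so a typo or a stale role name results in denied access rather than accidental access.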

When it comes to AI security, ambiguity is the enemy. You need to clearly define who is responsible for what. Without proper governance and supervision, a company’s use of generative AI can create or exacerbate legal and security risks. Establish a dedicated AI governance committee with members from security, legal, IT, and key business units. This team will be responsible for setting policies, reviewing new AI tools, and managing incident response. By assigning clear ownership, you ensure that security oversight doesn’t fall through the cracks and that everyone understands their part in protecting the organization.

Your security is only as strong as your weakest link, and that often includes third-party vendors. Before integrating any external AI tool, you need a rigorous vetting process. Your AI use and security policies should specifically mention what data can and cannot be included in queries to third-party platforms. Create an approved list of AI vendors and tools, and establish clear guidelines for when and how they can be used. This helps you maintain control over your data and reduces the risk of introducing vulnerabilities from outside sources, allowing you to focus on vulnerability and threat prioritization within your own environment.

Using generative AI in your enterprise doesn’t give you a pass on legal and regulatory duties. In fact, it adds new layers of complexity. Meeting compliance requirements is a foundational part of any AI security strategy, helping you build trust with customers and avoid steep penalties. It’s about more than just checking boxes; it’s about integrating responsible, lawful practices into every stage of your AI lifecycle. Staying on top of the evolving legal landscape is crucial for protecting your organization from legal risks and ensuring your AI initiatives are built on solid ground.

When you use generative AI, you have to be meticulous about how you handle data to follow the rules and protect privacy. Long-standing data protection regulations like GDPR and CCPA absolutely apply to the data you feed into and get out of AI models. At the same time, new laws specifically targeting AI are being developed and passed around the globe. Your team needs to understand which regulations apply to your operations, especially concerning personal data, and ensure your AI systems are designed to comply from the start. This means having clear data handling policies and being transparent about how AI uses personal information.

Compliance isn’t a one-size-fits-all challenge. If you’re in a regulated industry like healthcare or finance, you already follow strict standards like HIPAA or PCI DSS. These rules extend to your use of AI. You must ensure that any AI application handling sensitive patient or financial data meets these specific requirements. The global landscape of AI-related policies is changing quickly, so it’s essential to monitor these developments. Your compliance strategy must be agile enough to adapt as new industry-specific guidelines and national laws emerge, ensuring your AI projects remain compliant over time.

If you can’t prove you’re compliant, you aren’t. Thorough documentation is your best defense in an audit and a cornerstone of good governance. You should document your processes using tools like “Data Cards” to detail how and where you collect training data, and “Model Cards” to explain your model’s performance, limitations, and intended use. When working with third-party models, always ask for their documentation or ensure your contracts include clauses that cover data protection and transparency. This paper trail is essential for accountability and demonstrates a commitment to responsible AI development and deployment.
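Documentation is easier to keep audit-ready when it lives in a structured, machine-checkable form. The sketch below models a Model Card as a small data class; apart from performance, limitations, and intended use, which the text names, the field names are assumptions, and published Model Card templates include more sections than shown here.

```python
from dataclasses import asdict, dataclass, field
import json


@dataclass
class ModelCard:
    name: str
    version: str
    intended_use: str
    limitations: list[str] = field(default_factory=list)
    training_data_sources: list[str] = field(default_factory=list)
    evaluation_metrics: dict[str, float] = field(default_factory=dict)

    def missing_fields(self) -> list[str]:
        """List fields left empty, so incomplete cards are caught in review."""
        return [key for key, value in asdict(self).items() if not value]

    def to_json(self) -> str:
        """Serialize the card so it can be stored alongside the model."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)
```

A release checklist could require `missing_fields()` to return an empty list before a model is approved for deployment.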

Without proper governance, your use of generative AI can create significant legal and financial risks. As PwC notes, lax data security can lead to the public exposure of trade secrets and sensitive customer data. The best way to manage this is to be audit-ready at all times. This means having your documentation in order, your policies clearly defined, and your controls tested. A platform that provides a unified view of cyber risks can be invaluable here, giving you the visibility needed to demonstrate due diligence. Proactive audit preparation shows that your organization is serious about managing AI risks and protecting its assets.

Building a strong security posture for generative AI isn’t about a single solution; it’s about creating a resilient, multi-layered defense. Think of it as a continuous cycle of improvement rather than a one-and-done project. As AI technology evolves, so do the threats. Your security strategy needs to be just as dynamic. This means moving beyond simply reacting to incidents and adopting a proactive stance that anticipates and mitigates risks before they can cause damage.

A robust AI security posture integrates advanced technical safeguards with proactive risk management and rigorous testing. It’s supported by a culture that understands security is a shared responsibility. By focusing on these key areas, you can build a framework that not only protects your data and models but also enables your organization to innovate with confidence. A unified view of your cyber risks is essential for seeing how these different layers work together, helping you focus on the threats that matter most. Let’s walk through the practical steps you can take to fortify your defenses.

Start by focusing on the fundamentals of data protection. You need to encrypt the data sets used to train your AI models, as well as all connections to and from them. This ensures that even if data is intercepted, it remains unreadable and secure. Beyond encryption, it’s crucial to protect your organization’s most sensitive information with strong security policies and controls. This isn’t just about technology; it’s about defining clear rules for how data can be accessed, used, and shared within AI systems. These measures form the first line of defense for your AI infrastructure, safeguarding the valuable data that fuels your models.

To use generative AI safely, your organization must establish strict guidelines and control mechanisms from the outset. Without proper governance and supervision, you open the door to significant legal and compliance risks. Proactive risk management means identifying potential security gaps in your AI workflows before they can be exploited. This involves regularly assessing how your teams are using AI tools and what kind of data they are inputting. By creating a formal process for risk management, you can transform your security approach from reactive guesswork into confident, proactive exposure reduction.

Policies and controls are only effective if they work as intended. That’s why consistent security testing is non-negotiable. You should regularly conduct security audits of your AI implementations to verify that your controls are properly configured and enforced. Strengthen your preventive measures by implementing enhanced access controls like multi-factor authentication (MFA) and deploying AI-specific data loss prevention (DLP) tools. To truly understand your defenses, you need to test them against real-world attack scenarios through adversarial exposure validation, which helps you find and fix weaknesses before an attacker does.

The rise of generative AI is creating new vulnerabilities across your entire organization, which highlights the need for a continuous response to evolving threats. The security landscape is not static, and your defense strategy can’t be either. Concerns over risks like insecure AI-generated code mean you need ongoing vigilance and adaptation. Stay informed about the latest AI-related threats and vulnerabilities by subscribing to threat advisories and regularly updating your security protocols. Fostering a mindset of continuous improvement ensures your AI security posture remains strong and resilient against future challenges.

What’s the most critical first step my organization should take to secure generative AI?

Before you get into the technical controls, your most important first step is to establish a clear and simple AI usage policy. You need to define the rules of the road for your employees, outlining exactly what kind of company data is and is not permitted in public or internal AI tools. This single document provides immediate guardrails, helps prevent accidental data leaks, and sets the foundation for your entire AI security strategy.

How is securing AI different from the cybersecurity practices we already have in place?

While many core principles of cybersecurity still apply, AI introduces entirely new areas of risk. Traditional security focuses on protecting networks, endpoints, and applications. AI security expands that to include the models themselves, which can be stolen or manipulated through data poisoning. It also addresses unique threats like prompt injection attacks and the risk of employees inadvertently leaking sensitive data through their conversations with an AI.

My employees are already using public AI tools. What should I do now?

The first thing is not to panic or issue a blanket ban, which can drive usage underground. Instead, start by getting a handle on which tools are being used and for what purpose. You can then perform a risk assessment on those platforms, create an approved list of vetted tools, and immediately roll out training that focuses on responsible data handling. It’s about guiding existing behavior toward safer practices, not just stopping it.

Who is ultimately responsible for AI security: the security team, developers, or the employees using the tools?

AI security is a shared responsibility, and a strong security culture makes that clear. The security team is responsible for creating the governance framework, setting policies, and providing the right tools. Developers are responsible for building and deploying AI systems securely. And every employee who uses an AI tool is responsible for following the guidelines and protecting company data. When everyone understands their role, your defenses become much stronger.

How can we implement these security measures without slowing down our teams who want to innovate with AI?

Think of a good security framework not as a set of brakes, but as guardrails on a highway. It doesn’t stop you from moving forward; it keeps you from going off a cliff. By establishing clear policies, providing a list of approved tools, and integrating security into the development process from the start, you give your teams a safe environment to experiment and innovate. A solid foundation actually allows you to adopt new AI technologies more confidently and quickly in the long run.